Connect to Destination

This section details the steps to connect to a storage destination where ingested data will be written.

Overview

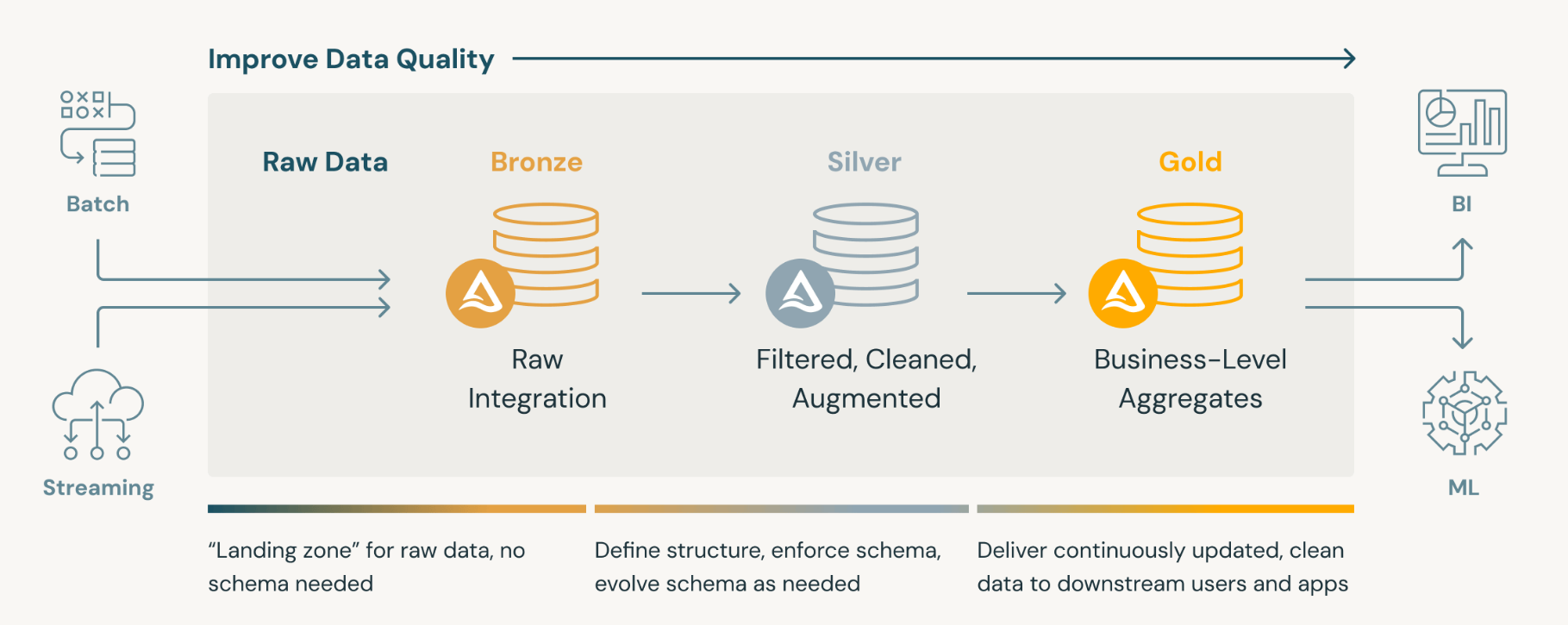

By default, ingested data is written to the customer's cloud storage account in Azure, AWS S3, or Google Cloud. DataStori follows the Medallion Architecture and places ingested data in the following folders:

- Bronze folder: Incoming 'as is' raw data is written here.

- Silver folder: Deduplicated, flattened, and quality-checked data is written here in the Delta format.

- Gold folder: Business-level aggregated data resides here. DataStori does not write directly to the Gold folder.
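The Bronze-to-Silver step can be illustrated with a minimal Python sketch that flattens and deduplicates raw records. This is an illustration under stated assumptions, not DataStori's implementation: the `flatten` and `bronze_to_silver` helpers, the `_` key separator, and the "last record wins per primary key" rule are all hypothetical, and quality checks and the Delta write are omitted.

```python
import json
from typing import Any


def flatten(record: dict[str, Any], parent: str = "", sep: str = "_") -> dict[str, Any]:
    """Flatten nested dicts into one level, joining keys with `sep` (hypothetical convention)."""
    flat: dict[str, Any] = {}
    for key, value in record.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


def bronze_to_silver(records: list[dict[str, Any]], key: str) -> list[dict[str, Any]]:
    """Flatten raw records and keep only the last occurrence of each primary key."""
    latest: dict[Any, dict[str, Any]] = {}
    for raw in records:
        row = flatten(raw)
        latest[row[key]] = row  # a later record with the same key overwrites the earlier one
    return list(latest.values())


# Made-up raw ("Bronze") records; id 1 arrives twice.
bronze = [
    {"id": 1, "customer": {"name": "Acme", "city": "Pune"}},
    {"id": 2, "customer": {"name": "Globex", "city": "Austin"}},
    {"id": 1, "customer": {"name": "Acme Corp", "city": "Pune"}},
]
silver = bronze_to_silver(bronze, key="id")
print(json.dumps(silver))
```

Nested fields such as `customer.name` become top-level columns (`customer_name`), and the second record with `id` 1 replaces the first.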

Steps

In the Destinations tab, select '+ Add New Destination'.

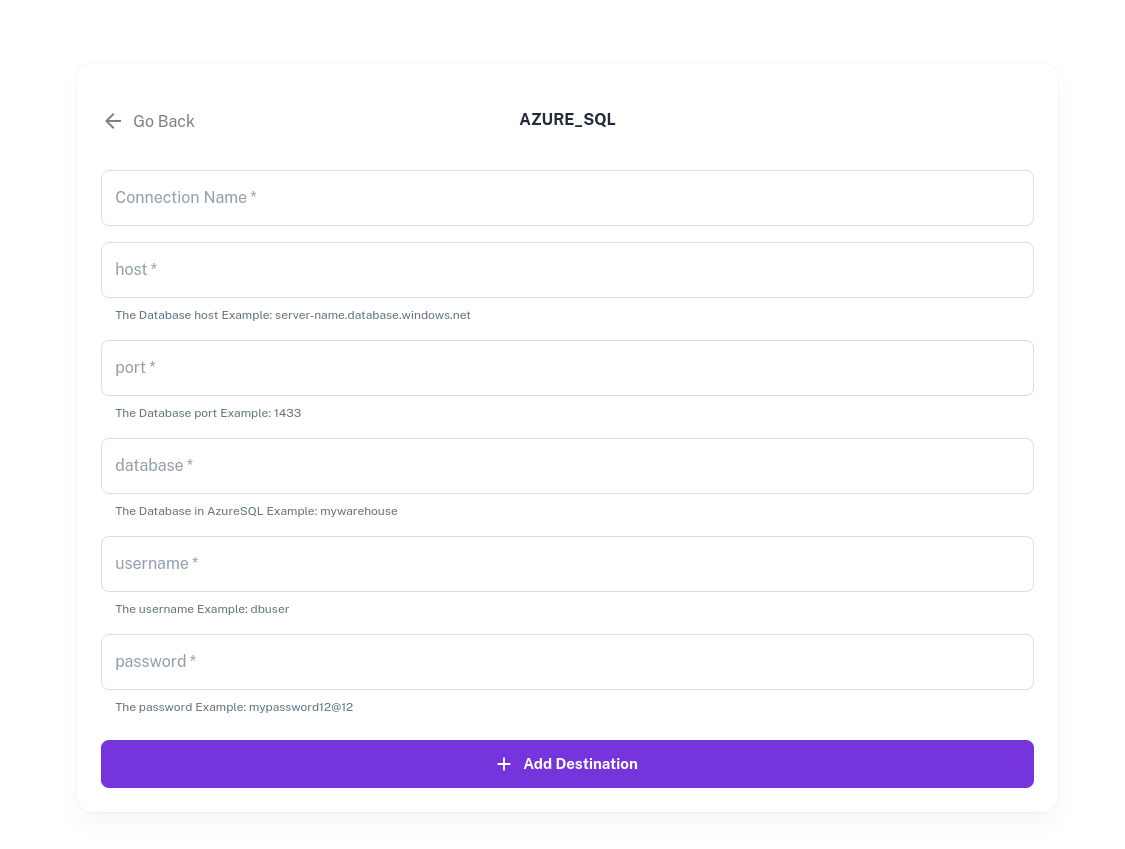

Select your data storage destination and fill out the connection form with its authentication details. Destination credentials are encrypted and stored in the DataStori application.

Example: Azure SQL Connection Form

If your destination is not in the list, write to contact@datastori.io and we will set it up from the backend.

Warning: DataStori cannot validate the destination credentials entered by a user.

If your database runs in a private subnet, please ensure that it is accessible to the servers running the data pipelines. The database does not need to be public or open to external tools.
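One quick way to confirm that a private database is reachable is a plain TCP connection test run from a host in the same network as the pipeline servers. The sketch below assumes you have such a host with Python available; the `can_reach` helper is hypothetical and checks network reachability only, not credentials.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures (all OSError subclasses).
        return False
```

For example, `can_reach("10.0.1.25", 5432)` returns `False` if a firewall blocks the port or nothing is listening on it; the address and port are placeholders for your own database host.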

Users can revoke or delete their connections at any time.

File Formats and Database Destinations

File Formats

In addition to the Delta format, DataStori can write files in the following formats:

- Parquet

- Iceberg

- CSV
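When choosing CSV over Delta, Parquet, or Iceberg, note that CSV carries no schema: every value is read back as text. A small illustration with Python's standard `csv` module (the sample rows are made up):

```python
import csv
import io

# Typed sample rows, as they might exist before export.
rows = [
    {"id": 1, "amount": 19.99, "active": True},
    {"id": 2, "amount": 5.0, "active": False},
]

# Write the rows as CSV text with a header line.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["id", "amount", "active"])
writer.writeheader()
writer.writerows(rows)
csv_text = buffer.getvalue()

# Reading the CSV back yields strings only; the original int/float/bool types are gone.
read_back = list(csv.DictReader(io.StringIO(csv_text)))
print(read_back)
```

Parquet, Delta, and Iceberg store column types alongside the data, so a consumer does not have to re-cast `id` to an integer or `active` to a boolean.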

Database Destinations

DataStori can write to any database supported by SQLAlchemy (see the SQLAlchemy dialects documentation). Common destinations include:

- MySQL

- PostgreSQL

- Azure SQL

- Snowflake

- Microsoft SQL Server
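SQLAlchemy identifies a database by a URL of the form `dialect+driver://user:password@host:port/database`. The sketch below shows how connection details like those in the DataStori form typically map onto such a URL, for example to test the same credentials from your own tools. The `sqlalchemy_url` helper and the driver choices (`pymysql`, `psycopg2`, `pyodbc`) are assumptions; each driver must be installed separately.

```python
from urllib.parse import quote_plus

# Assumed dialect+driver prefixes; each requires its driver package.
DIALECTS = {
    "mysql": "mysql+pymysql",
    "postgresql": "postgresql+psycopg2",
    "sqlserver": "mssql+pyodbc",  # also used for Azure SQL
}


def sqlalchemy_url(
    kind: str,
    user: str,
    password: str,
    host: str,
    port: int,
    database: str,
    **query: str,
) -> str:
    """Build a SQLAlchemy URL, percent-escaping credentials and query values."""
    url = (
        f"{DIALECTS[kind]}://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )
    if query:
        url += "?" + "&".join(f"{k}={quote_plus(v)}" for k, v in query.items())
    return url


print(sqlalchemy_url("postgresql", "etl", "s3cr@t", "db.internal", 5432, "warehouse"))
```

For Azure SQL, `sqlalchemy_url("sqlserver", "loader", "p@ss:word", "myserver.database.windows.net", 1433, "analytics", driver="ODBC Driver 18 for SQL Server")` escapes the password to `p%40ss%3Aword`. Snowflake uses the separate `snowflake-sqlalchemy` package and a different URL shape (`snowflake://user:password@account/database/schema`), so it is left out here. If you already depend on SQLAlchemy, its `URL.create()` performs the same escaping.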